
Innovative · Efficient · Secure

From the MIT.nano Immersion Lab and LiDAR mapping to AI model delivery, disaster recovery, and SIEM observability, our patented solution consistently compresses data far beyond what ZIP, 7z, and Zstd achieve on the same files. Terabytes are reduced to megabytes, with zero data loss.

At the MIT.nano Immersion Lab, a focused session captured high-resolution 3D scans, architectural space models, Unreal templates, and real-time motion capture (mocap). The files were already compressed by their own formats (JPG, AVI), so ZIP and 7z reduced them only marginally (about 1.02:1). We ran a full capture-to-recovery cycle and verified every file with SHA-256.
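The SHA-256 check in a capture-to-recovery cycle can be sketched as a tree comparison: hash every file under the original capture directory and its counterpart in the restored directory, then report anything missing, extra, or changed. This is a minimal sketch using only Python's standard library; the function names and directory layout are illustrative assumptions, not the tooling used in this session.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large scans and videos never load fully into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def relative_files(root: Path) -> set[str]:
    """Collect every regular file under root as a POSIX-style relative path."""
    return {p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()}


def verify_restore(original_dir: str, restored_dir: str) -> dict[str, list[str]]:
    """Compare two trees file by file; three empty lists means a bit-for-bit match."""
    orig, rest = Path(original_dir), Path(restored_dir)
    orig_files, rest_files = relative_files(orig), relative_files(rest)
    changed = [
        rel for rel in sorted(orig_files & rest_files)
        if sha256_file(orig / rel) != sha256_file(rest / rel)
    ]
    return {
        "missing": sorted(orig_files - rest_files),
        "extra": sorted(rest_files - orig_files),
        "changed": changed,
    }
```

Calling `verify_restore("capture/", "restored/")` and getting three empty lists back is the pass condition; any entry names the exact file that failed.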

Think of this as building a digital twin of a room or a person. Dozens of cameras shoot images to rebuild 3D shapes, and motion systems record how people move. That creates a lot of files fast.

| Subset | Input | Output (ours) | Ratio (traditional methods) | Ratio (ours) |
|---|---|---|---|---|
| Lenscloud 3D (240+240 JPG) | 989 MB | 0.257 MB | 1.02:1 | 3848:1 |
| OptitrackTraining (AVI) | 179 MB | 0.0019 MB | 1.02:1 | 94210:1 |
| Mixed project assets | 58.0 GB | 5.10 MB | 1.02:1 | 11370:1 |

We supported LiDAR mapping teams combining aerial corridor surveys with mobile scans of urban blocks. The files were already compressed (LAZ, JPG, MP4), so ZIP and 7z made little difference. We captured a four-hour window of data and verified bit-for-bit recovery with SHA-256.
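Why do ZIP and 7z barely move the needle on LAZ, JPG, and MP4? Those formats have already removed most redundancy, so their bytes look close to random to a general-purpose compressor. The sketch below uses Python's standard `zlib` (DEFLATE, the method ZIP archives use) and `lzma` (LZMA2, the family behind 7z and xz) as stand-ins, and compares repetitive text against random bytes that stand in for already-compressed payloads. It illustrates the entropy argument only; it is an assumption-laden toy and does not model our codec.

```python
import lzma
import os
import zlib


def ratio(raw: bytes, packed: bytes) -> float:
    """Compression ratio as input size over output size (higher is better)."""
    return len(raw) / len(packed)


def compare(data: bytes) -> tuple[float, float]:
    """Ratios achieved by DEFLATE (level 9) and LZMA2 (default xz preset) on the same bytes."""
    return ratio(data, zlib.compress(data, 9)), ratio(data, lzma.compress(data))


# Highly redundant input: the kind of data general-purpose compressors handle well.
repetitive = b"tile=0042 class=ground intensity=118\n" * 20_000
# Random bytes as a stand-in for already-compressed JPG/LAZ/MP4 payloads (assumption).
high_entropy = os.urandom(len(repetitive))

for label, data in (("repetitive text", repetitive), ("high-entropy bytes", high_entropy)):
    deflate_r, lzma_r = compare(data)
    print(f"{label:>18}: DEFLATE {deflate_r:9.2f}:1   LZMA2 {lzma_r:9.2f}:1")
```

On the random input both ratios land at or just below 1:1, because container and block headers add a few bytes, while the repetitive text shrinks by two orders of magnitude or more.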

LiDAR builds detailed 3D maps of the real world. The files are heavy, and moving them between regions can take hours. Making them much smaller, without changing a single bit, speeds up the whole workflow.

| Subset | Input | Output (ours) | Ratio (traditional methods) | Ratio (ours) |
|---|---|---|---|---|
| LAZ tile set (urban) | 1.888 TB | 0.35 GB | 1.01:1 | 5524:1 |
| Intensity imagery (JPG) | 990 MB | 0.26 MB | 1.01:1 | 3808:1 |
| QA clip (MP4) | 210 MB | 0.0021 MB | 1.01:1 | 100000:1 |
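The Ratio column is input size divided by output size once both are in the same unit. The urban LAZ row shows the figures are consistent with binary multiples (1 TB = 1024 GB): 1.888 × 1024 / 0.35 ≈ 5524, whereas decimal multiples would give about 5394. A minimal sketch of that conversion, with hypothetical helper names:

```python
# Binary multiples, which reproduce the ratios reported in these tables.
UNIT_BYTES = {"MB": 1024**2, "GB": 1024**3, "TB": 1024**4}


def to_bytes(size: str) -> float:
    """Parse strings like '1.888 TB' or '0.35 GB' into bytes."""
    value, unit = size.split()
    return float(value) * UNIT_BYTES[unit]


def compression_ratio(input_size: str, output_size: str) -> float:
    """Ratio = original size / compressed size, both expressed in bytes."""
    return to_bytes(input_size) / to_bytes(output_size)


for row, inp, out in [
    ("LAZ tile set (urban)", "1.888 TB", "0.35 GB"),
    ("Intensity imagery (JPG)", "990 MB", "0.26 MB"),
    ("QA clip (MP4)", "210 MB", "0.0021 MB"),
]:
    print(f"{row:<24} {compression_ratio(inp, out):>10,.0f}:1")
```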

The training team in NC ships weekly model bundles to SF for evaluation and deployment. Each bundle includes weights for several model families, checkpoints, optimizer states, tokenizers, and containers. We executed a real weekly handoff and validated every file with SHA-256.
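A site-to-site handoff like NC to SF is commonly verified with a checksum manifest: the sender records a SHA-256 digest per file and ships the manifest with the bundle, and the receiver recomputes and compares. The sketch below writes lines as `<hex digest>  <relative path>`, the text-mode layout GNU `sha256sum -c` reads, so either this script or coreutils can run the check. Function names and bundle layout are hypothetical; this is not the teams' actual tooling.

```python
import hashlib
from pathlib import Path


def file_sha256(path: Path) -> str:
    """Stream the file through SHA-256 in 4 MiB reads (weight shards can be many GB)."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        while chunk := f.read(4 << 20):
            h.update(chunk)
    return h.hexdigest()


def write_manifest(bundle_dir: str) -> str:
    """Sender side: one '<digest>  <relative path>' line per file, sorted for stable diffs."""
    root = Path(bundle_dir)
    lines = [
        f"{file_sha256(p)}  {p.relative_to(root).as_posix()}"
        for p in sorted(root.rglob("*"))
        if p.is_file()
    ]
    return "\n".join(lines) + "\n"


def check_manifest(bundle_dir: str, manifest: str) -> list[str]:
    """Receiver side: return paths whose file is missing or whose digest differs."""
    root = Path(bundle_dir)
    failures = []
    for line in manifest.splitlines():
        if not line.strip():
            continue
        expected, rel = line.split("  ", 1)
        target = root / rel
        if not target.is_file() or file_sha256(target) != expected:
            failures.append(rel)
    return failures
```

An empty list from `check_manifest` on the receiving side means every file in the bundle arrived unchanged.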

AI models are huge “memory files.” Each week, the training site sends the latest set to the deployment site so everyone tests the same thing. Without shrinking those files, a single handoff can take many hours.
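To put "many hours" in numbers: transfer time is bundle size in bits divided by effective link throughput. The sketch below assumes a sustained 10 Gbit/s link and a 50% efficiency case (both hypothetical figures, not ones reported for this handoff), binary units (1 TB = 1024^4 bytes), and the 12.00 TB total of the six bundle subsets listed in the table that follows.

```python
TIB = 1024**4  # bytes per TB, assuming binary units as the ratio columns imply


def transfer_hours(size_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours to move size_bytes over a link_gbps gigabit/s link at the given efficiency."""
    bits = size_bytes * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


bundle_tb = 3.10 + 2.80 + 0.95 + 0.60 + 0.45 + 4.10  # weekly bundle subsets from the table
print(f"{transfer_hours(bundle_tb * TIB, link_gbps=10):.2f} h at a perfect 10 Gbit/s")
print(f"{transfer_hours(bundle_tb * TIB, link_gbps=10, efficiency=0.5):.2f} h at 50% efficiency")
```

Under these assumptions the uncompressed bundle needs about 2.93 hours on an ideal link and about 5.86 hours at half efficiency.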

| Subset | Input | Output (ours) | Ratio (traditional methods) | Ratio (ours) |
|---|---|---|---|---|
| Weight shards | 3.10 TB | 340 MB | 1.04:1 | 9561:1 |
| Optimizer states | 2.80 TB | 120 MB | 1.05:1 | 24467:1 |
| Checkpoints | 0.95 TB | 78 MB | 1.06:1 | 12771:1 |
| Tokenizers/configs | 0.60 TB | 15 MB | 1.02:1 | 41943:1 |
| LoRA/PEFT adapters | 0.45 TB | 32 MB | 1.03:1 | 14746:1 |
| Containers & aux | 4.10 TB | 860 MB | 1.04:1 | 4999:1 |
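When summarizing a multi-part bundle, the overall ratio is total input over total output, not the average of the per-row ratios, because rows with large outputs (here the containers at 860 MB) dominate what actually has to be moved. A sketch using the six rows above, converting TB to MB at 1024 × 1024 as the table's ratios imply; the function names are illustrative.

```python
MB_PER_TB = 1024 * 1024  # binary units, matching the Ratio column above

# (subset, input in TB, output in MB) copied from the weekly bundle table
rows = [
    ("Weight shards", 3.10, 340),
    ("Optimizer states", 2.80, 120),
    ("Checkpoints", 0.95, 78),
    ("Tokenizers/configs", 0.60, 15),
    ("LoRA/PEFT adapters", 0.45, 32),
    ("Containers & aux", 4.10, 860),
]


def overall_ratio(table) -> float:
    """Size-weighted ratio: everything that went in over everything that came out."""
    total_in_mb = sum(tb * MB_PER_TB for _, tb, _ in table)
    total_out_mb = sum(mb for _, _, mb in table)
    return total_in_mb / total_out_mb


def mean_of_ratios(table) -> float:
    """Unweighted average of per-row ratios; overstates the bundle-level result."""
    return sum(tb * MB_PER_TB / mb for _, tb, mb in table) / len(table)


print(f"overall: {overall_ratio(rows):,.0f}:1   mean of rows: {mean_of_ratios(rows):,.0f}:1")
```

With these rows the overall figure comes out near 8,708:1, roughly half the simple mean of about 18,081:1.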

We collaborated with an enterprise running multi-TB nightly backups across regions. Its artifacts were already stored in formats such as tar.zst and VMDK/QCOW2, on which traditional tools made little difference. We processed three consecutive nightly backups and validated exact recovery with SHA-256.

Backups are safety copies. When they grow to terabytes every night, they become hard to finish within the nightly window and expensive to copy to a second region. They must also restore to exactly the original data.

| Subset | Input | Output (ours) | Ratio (traditional methods) | Ratio (ours) |
|---|---|---|---|---|
| DB dumps (tar.zst) | 12.0 TB | 380 MB | 1.07:1 | 33113:1 |
| VM images (VMDK/QCOW2) | 18.0 TB | 5200 MB | 1.04:1 | 3630:1 |
| File shares (mixed) | 6.0 TB | 210 MB | 1.02:1 | 29959:1 |

We worked with a platform ingesting millions of events per day. Even with JSON+Zstd and Parquet/ORC, the monthly footprint kept rising. We sampled a 24-hour slice and validated bit-for-bit recovery with SHA-256.

Logs are the diary of your systems. They help resolve incidents and prove what happened. But the diary can grow to hundreds of terabytes, which are hard to store and slow to search, unless you shrink it safely.

| Subset | Input | Output (ours) | Traditional methods | Ratio (ours) |

|---|---|---|---|---|

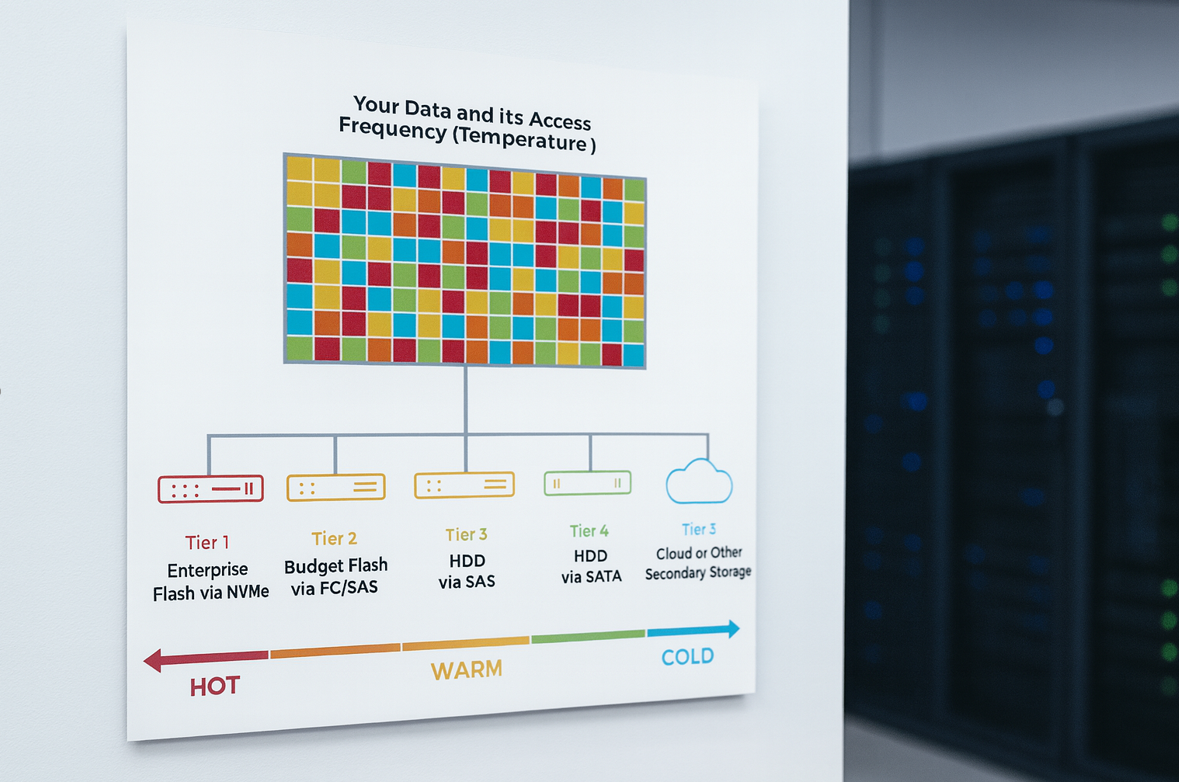

| Hot - JSON+Zstd | 12.0 TB | 410 MB | 1.06:1 | 30690:1 |

| Warm - Parquet/ORC | 18.0 TB | 620 MB | 1.05:1 | 30443:1 |

| Cold - Archive objects | 20.0 TB | 700 MB | 1.04:1 | 29959:1 |